Hardware Security

Hardware security focuses on finding and addressing potential security issues at the physical layer of systems. In SPQR Lab, our hardware security research covers acoustic attacks on hardware and medical device security.

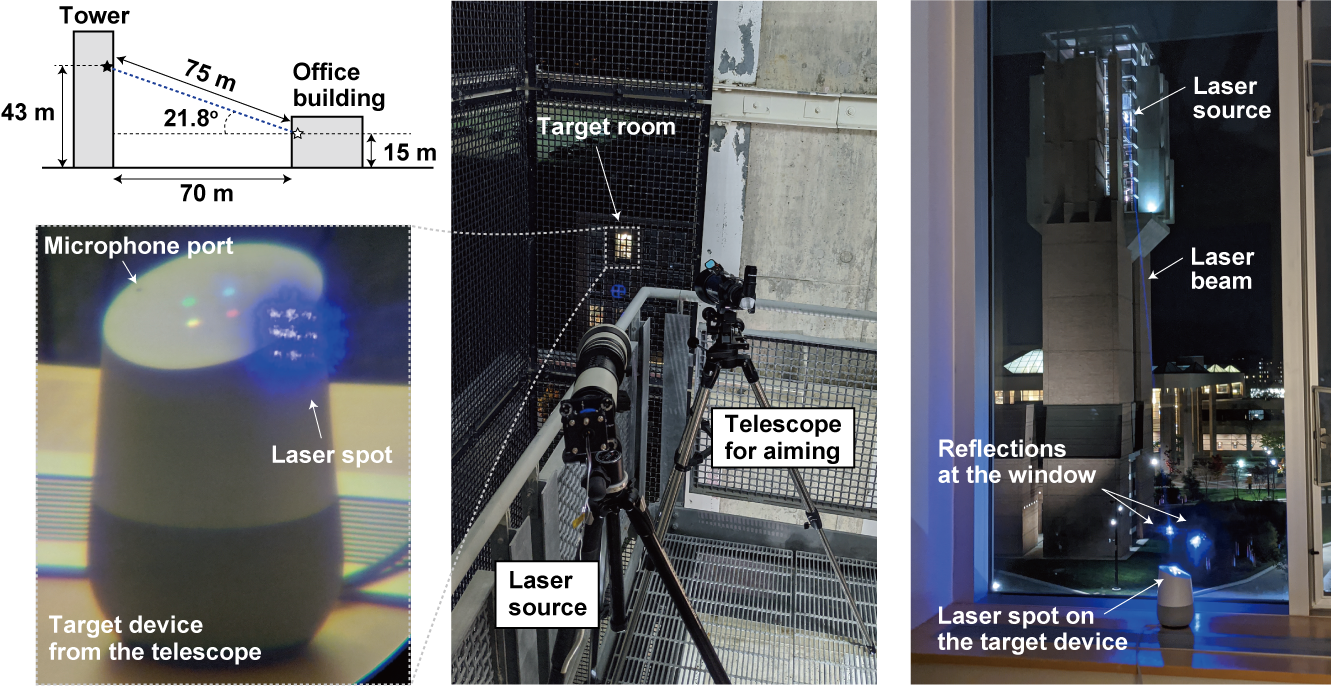

Light Commands: Laser-Based Audio Injection Attacks on Voice-Controllable Systems

Using modulated laser light to inject acoustic signals into MEMS microphones, we show that voice-controllable systems can be exploited from long distances and through windows. This vulnerability to light is inherent to MEMS microphones, and we present several defenses that can protect voice-controllable systems.
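
As a rough illustration of what "modulated laser light" can mean here, the sketch below maps an audio waveform onto a laser diode drive current by amplitude modulation: a DC bias keeps the diode emitting, and the audio adds a swing around that bias. The function names, the parameters `bias_ma` and `depth_ma`, and the numeric values are hypothetical and chosen only for illustration; this is not the setup used in the project.

```python
import math

def tone(freq_hz, sample_rate_hz, n_samples):
    """Generate a unit-amplitude sine tone as a list of samples in [-1, 1]."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
            for n in range(n_samples)]

def laser_drive_current(audio, bias_ma, depth_ma):
    """Map audio samples in [-1, 1] to a drive current in mA.

    bias_ma (DC bias) and depth_ma (peak swing) are hypothetical
    parameters. The swing must not exceed the bias, otherwise the
    current would go negative and the waveform would be clipped.
    """
    if depth_ma > bias_ma:
        raise ValueError("swing larger than bias would push current below zero")
    # Clamp each sample to [-1, 1] before scaling it around the bias.
    return [bias_ma + depth_ma * max(-1.0, min(1.0, s)) for s in audio]

# Illustrative values only: a 1 kHz tone sampled at 48 kHz.
audio = tone(1000.0, 48000.0, 480)
current = laser_drive_current(audio, bias_ma=200.0, depth_ma=150.0)
```

Under these assumptions the light intensity follows the audio envelope, which is the property an injection attack of this kind relies on.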

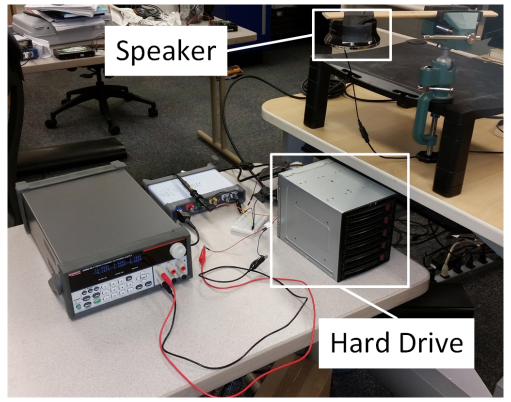

Hard Drive of Hearing: Disks that Eavesdrop with a Synthesized Microphone

We demonstrate that the mechanical components in magnetic hard disk drives behave as microphones with sufficient precision to extract and parse human speech. We also present defense mechanisms, such as the use of ultrasonic aliasing, that can mitigate acoustic eavesdropping by synthesized microphones in hard disk drives.
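
The summary above names ultrasonic aliasing without describing the mechanism. As general background only, the sketch below computes where a pure tone appears after it is sampled without an anti-aliasing filter, which is the signal-processing effect the term refers to. The function name and the example frequencies are assumptions for illustration, not values from the project.

```python
def aliased_frequency(tone_hz, sample_rate_hz):
    """Return the apparent frequency of a pure tone after sampling.

    A tone above the Nyquist frequency (sample_rate_hz / 2) folds back
    into the range [0, sample_rate_hz / 2].
    """
    folded = tone_hz % sample_rate_hz
    return min(folded, sample_rate_hz - folded)

# A hypothetical 25 kHz ultrasonic tone sampled at 16 kHz
# shows up at 7 kHz, inside the audible band.
print(aliased_frequency(25000, 16000))  # prints 7000
```

An inaudible tone can therefore land in the same band as speech once it has been sampled, which is one way such a signal could mask what a synthesized microphone records.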

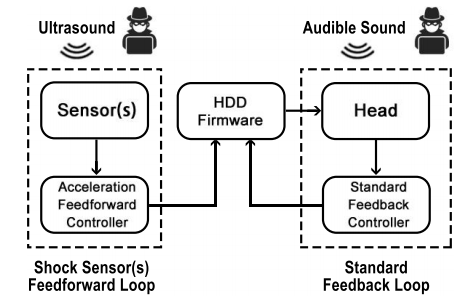

Blue Note: How Intentional Acoustic Interference Damages Availability and Integrity in Hard Disk Drives and Operating Systems

We show that intentional acoustic interference causes unusual errors in the mechanics of magnetic hard disk drives in desktop and laptop computers, damaging integrity and availability in both hardware and software, with effects such as file system corruption and operating system reboots. To defend against such attacks, we created and modeled a new feedback controller that could be deployed as a firmware update to attenuate the intentional acoustic interference.

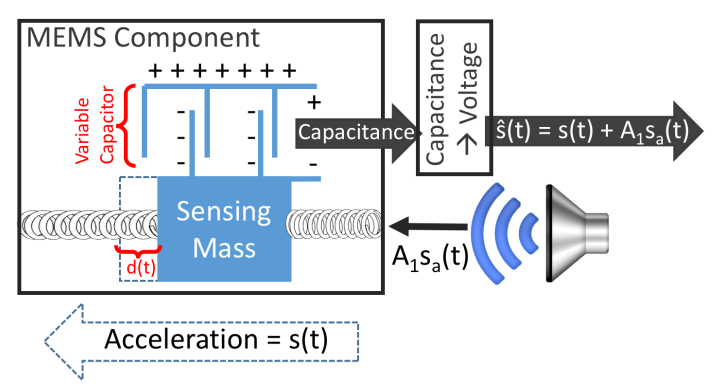

Walnut: Acoustic Attacks on MEMS Sensors

We investigate how analog acoustic injection attacks can damage the digital integrity of a popular type of sensor: the capacitive MEMS accelerometer. Spoofing such sensors with intentional acoustic interference enables an out-of-spec pathway for attackers to deliver chosen digital values to microprocessors and embedded systems that blindly trust the unvalidated integrity of sensor outputs.