The Security and Privacy Research Group at Northeastern University in Boston is led by Prof. Kevin Fu. Our world-class team members conduct advanced research to protect the cybersecurity of healthcare delivery, medical devices, and sensor physics. We work broadly on research problems pertaining to embedded security. We explore the research frontiers of computer science, electrical and computer engineering, and healthcare. Our healthcare cybersecurity research tests how to protect hospitals and medical devices from cyberthreats. It asks not how to use existing security products, but rather how to change the way we think about security and disrupt the business models of today. Our sensor physics research examines how to protect analog sensors from intentional electromagnetic interference, acoustic interference, and light injection.

Light Commands

Light Commands is a vulnerability of MEMS microphones that allows attackers to use light to remotely inject inaudible and invisible commands into voice assistants, such as Google Assistant, Amazon Alexa, Facebook Portal, and Apple Siri. In our paper, we demonstrate this effect by successfully using light to inject malicious commands into several voice-controlled devices, such as smart speakers, tablets, and phones, across large distances and through glass windows.

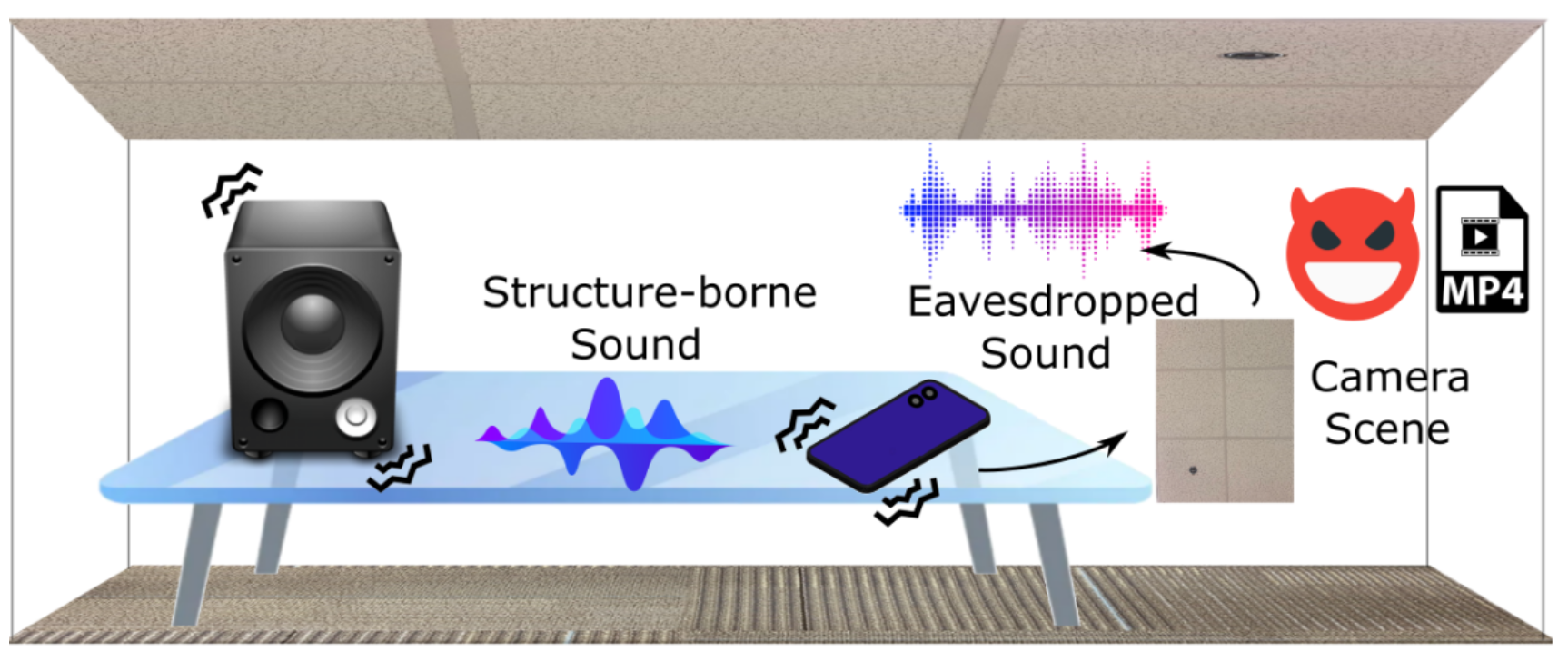

Side Eye: Extract Sound from Muted Videos

Side Eye is a technique that allows adversaries to extract acoustic information from a stream of camera photos. When sound energy vibrates the smartphone body, it shakes the camera lenses and creates tiny Point-of-View (POV) variations in the camera images. Adversaries can thus infer the sound information by analyzing these POV variations. Side Eye enables a family of sensor-based side-channel attacks for acoustic eavesdropping, even when the smartphone microphone is disabled.

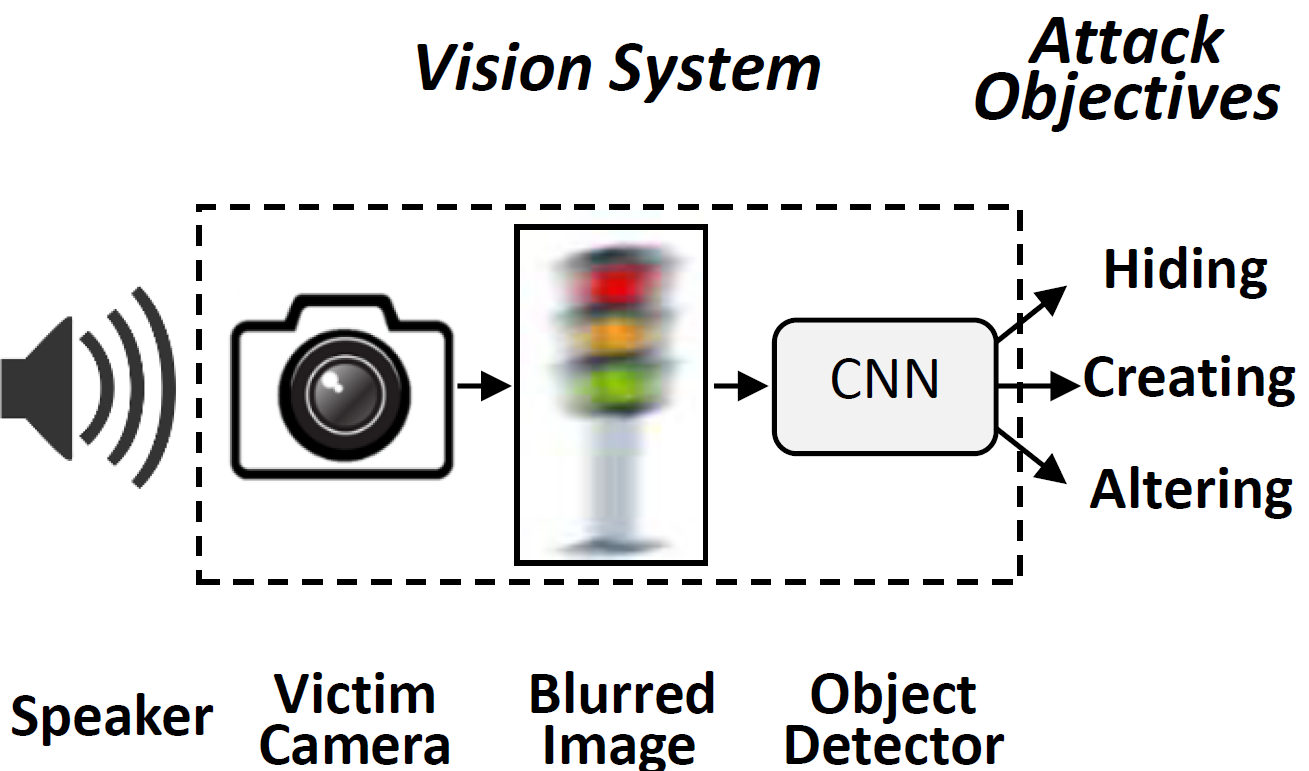

Poltergeist: Manipulating Computer Vision Systems with Acoustics and Motion

As cameras become increasingly pervasive, the security and privacy of computer vision systems are a focus of SPQR's research. Our work explores how physical processes such as acoustics and motion are captured and interpreted by cameras, and how malicious parties may exploit these effects to intentionally adulterate vision systems’ output or exfiltrate sensitive information from it. Our recent research shows that acoustic injections into cameras may lead to accidents involving autonomous vehicles.